Why AI Needs Aristotle: The Lyceum Project

The following is an excerpt from a blog post published by the Institute for Ethics in AI about The Lyceum Project, a conference being hosted in Athens on the 20th of June 2024. The conference will feature discussions by leading philosophers and journalists and will include a special address from the Greek Prime Minister, Kyriakos Mitsotakis, as participants take up the most pressing question of our times – the ethical regulation of AI.

I feel like we are nearing the end of times. We humans are losing faith in ourselves.

These words were uttered by the acclaimed eighty-three-year-old Japanese animator and filmmaker, Hayao Miyazaki, in a video clip that was posted recently on the social media platform X. Earlier in the clip Miyazaki was shown sitting across a conference table from a group of chastened-looking technologists. The group was seeking, as one of them explained, to “build a machine that can draw pictures like humans do”. “I am utterly disgusted”, Miyazaki rebuked them. “If you really want to make creepy stuff, you can go ahead and do it. I would never wish to incorporate this technology in my work at all. I strongly feel that this is an insult to life itself”. One of the computer scientists seemed to wipe a tear from the corner of his eye as he replied, apologetically and a little implausibly, that this was “just our experiment... We don’t mean to do anything by showing it to the world”.

This episode powerfully encapsulates a conflict that goes to the spiritual crux of our current moment in the development of AI technology. On the one hand, there is the drive by technologists, technology corporations, and governments to create sophisticated AI tools that can simulate more and more of the paradigmatic manifestations of human intelligence, from composing a poem to diagnosing an illness. For many of them, the ultimate goal, at the end of this road, is Artificial General Intelligence, a form of machine intelligence that spans the entire spectrum of human cognitive capabilities. On the other hand, there is the dreadful sense that this whole enterprise, for all its efficiency gains and other supposed benefits, is an affront to our human nature and a pervasive threat to our prospects of living a genuinely valuable human life – “an insult to life itself” in Miyazaki’s words.

The conflict just described stems from the fact that human intelligence, in all its formidable reach and complexity, has long been considered the locus of the special value that inheres in all human beings. It distinguishes us from artefacts and non-human animals alike. But if machine intelligence can eventually replicate or even out-perform human intelligence, where would that leave humans? Would the pervasive presence of AI in our lives be a negation of our humanity and an impediment to our ability to lead fulfilling human lives? Or can we incorporate intelligent machines into our lives in ways that dignify our humanity and promote our flourishing? It is this challenge, and not the rather far-fetched anxiety about human extinction in a robot apocalypse, that is the most fundamental ‘existential’ challenge posed today by the powerful new forms of Artificial Intelligence. It is a challenge that concerns what it means to be human in the age of AI, rather than just one about ensuring the continued survival of humanity.

Some take the view that the AI technological revolution is creating a radically new reality, one that demands a corresponding upheaval in our ethical thinking. This outlook can foster a sense of helplessness, the feeling that technological innovations are accelerating at an exponential rate, with radically transformative implications for every aspect of human life, while our ethical resources for engaging with these developments are pitifully meagre or non-existent. We reject this pessimistic and disempowering view of our ethical situation in the face of rapid technological change. We already have the rich ethical materials needed to engage with the challenges of the AI revolution, but to a significant degree they need to be rescued from present-day neglect, incorporated into our decision-making processes, and placed into dialogue with the dominant ideological frameworks that are currently steering the development of AI technologies: ideologies centred on the promotion of economic growth, maximizing the fulfilment of subjective preferences, or complying with legal standards, such as human rights law.

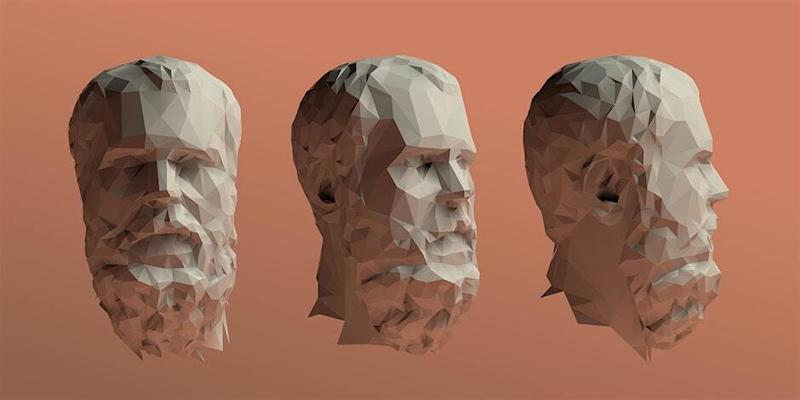

Surprising as it may seem, our contention is that the basic approach to ethics developed by the 4th-century BC Greek philosopher Aristotle, and subsequently built on by many later thinkers over the past 2,400 years, offers the most compelling framework for addressing the challenges of Artificial Intelligence today.

Read the rest of this article at the Institute for Ethics in AI